Hands-on session: training a model on a dataset, which in data science is called machine learning. The machine learns from your dataset using the type of model you choose to train, for example a linear regression model, a classification model, or others.

1 Source: Get sample dataset

```python
!curl https://topcs.blob.core.windows.net/public/FlightData.csv -o flightdata.csv
```
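The `!` prefix is a Jupyter shell escape, so the line above only works inside a notebook that has `curl` available. Outside a notebook, a minimal standard-library sketch could fetch the same file and skip the download when it is already present. The helper name `download_if_missing` is hypothetical and not part of the original session.

```python
import os
import urllib.request


def download_if_missing(url: str, path: str) -> str:
    """Download `url` to `path` unless `path` already exists; return the local path."""
    if not os.path.exists(path):
        # urlretrieve copies the resource at the URL into the given file
        urllib.request.urlretrieve(url, path)
    return path


# Usage (needs network access):
# download_if_missing("https://topcs.blob.core.windows.net/public/FlightData.csv", "flightdata.csv")
```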

2 Ingest: Import pandas, load the dataset into a DataFrame, and list some information about it for analysis

```python
import pandas as pd

df = pd.read_csv('flightdata.csv')
```

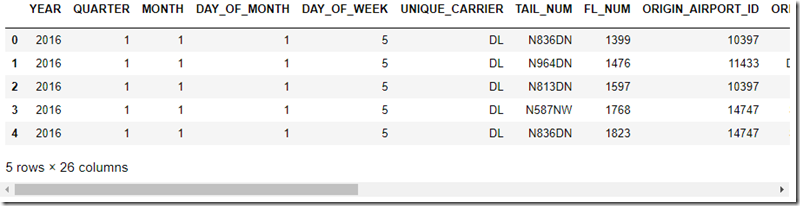

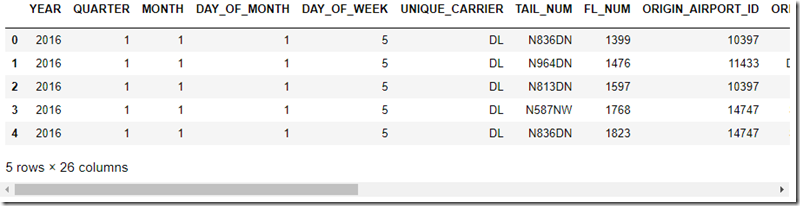

```python
df.head()
```

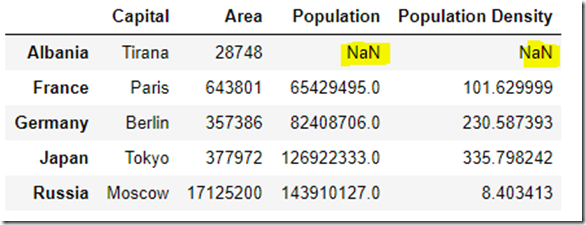
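For a quick text overview of column types, missing values and distinct values in one table, a small sketch built from standard pandas calls may help. It runs on a tiny hypothetical inline CSV with a few of the same column names rather than the real file, and the helper name `summarize` is illustrative only.

```python
import io

import pandas as pd


def summarize(frame: pd.DataFrame) -> pd.DataFrame:
    """One row per column: dtype, number of missing values, number of distinct values."""
    return pd.DataFrame({
        "dtype": frame.dtypes.astype(str),
        "missing": frame.isnull().sum(),
        "unique": frame.nunique(),
    })


# Tiny stand-in for flightdata.csv (hypothetical values)
sample = io.StringIO(
    "MONTH,ORIGIN,DEST,ARR_DEL15\n"
    "1,ATL,SEA,0\n"
    "1,JFK,ATL,\n"
    "2,ATL,MSP,1\n"
)
sample_df = pd.read_csv(sample)
print(sample_df.shape)  # (3, 4)
print(summarize(sample_df))
```

On the real data the call would be `summarize(df)`.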

3 Process: In the real world, few datasets can be used as-is to train machine-learning models. It is not uncommon for data scientists to spend 80% or more of their time on a project cleaning, preparing, and shaping the data — a process sometimes referred to as data wrangling. Typical actions include removing duplicate rows, removing rows or columns with missing values or algorithmically replacing the missing values, normalizing data, and selecting feature columns. A machine-learning model is only as good as the data it is trained with. Preparing the data is arguably the most crucial step in the machine-learning process.

Before you can prepare a dataset, you need to understand its content and structure. In the previous steps, you imported a dataset containing on-time arrival information for a major U.S. airline. That data included 26 columns and thousands of rows, with each row representing one flight and containing information such as the flight’s origin, destination, and scheduled departure time. You also loaded the data into the Jupyter notebook and used a simple Python script to create a pandas DataFrame from it.

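```python
# In a notebook, run each line in its own cell: only a cell's last expression is displayed
df.shape                  # (11231, 26)

list(df)                  # column names

df.isnull().values.any()  # True: some values are missing

df.isnull().sum()         # missing values per column
```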
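`df.isnull().sum()` reports absolute counts. The share of missing values per column is often easier to judge before narrowing the DataFrame to the columns you need. A small sketch on a hypothetical frame; the helper name `missing_share` and the 0.5 threshold are illustrative choices, not part of the original.

```python
import numpy as np
import pandas as pd


def missing_share(frame: pd.DataFrame) -> pd.Series:
    """Fraction of missing values per column, largest first."""
    return frame.isnull().mean().sort_values(ascending=False)


toy = pd.DataFrame({
    "MONTH": [1, 1, 2, 2],
    "ARR_DEL15": [0.0, np.nan, 1.0, 0.0],
    "EMPTY_COL": [np.nan] * 4,  # a column that is entirely blank
})
share = missing_share(toy)
print(share)

# Mostly empty columns are candidates for dropping (the threshold is a judgment call)
to_drop = share[share > 0.5].index.tolist()
print(to_drop)  # ['EMPTY_COL']
```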

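```python
# Keep only the feature columns plus the ARR_DEL15 label
df = df[["MONTH", "DAY_OF_MONTH", "DAY_OF_WEEK", "ORIGIN", "DEST", "CRS_DEP_TIME", "ARR_DEL15"]]

df.isnull().sum()

# Show the first rows that still contain a missing value
df[df.isnull().values.any(axis=1)].head()

# Treat a missing arrival-delay flag as a late arrival (1)
df = df.fillna({'ARR_DEL15': 1})
```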
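`ARR_DEL15` is the label: 1 when a flight arrived 15 or more minutes late, 0 otherwise. Passing a dict to `fillna` fills only the listed columns, and the code above treats a missing flag as a late arrival. One plausible reason a flag is missing is that the flight was canceled or diverted and never recorded an arrival, but that is an assumption to check against the data. A toy sketch of the effect, with an optional conversion of the now-complete label to integers:

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({
    "ORIGIN": ["ATL", "JFK", "SEA"],
    "ARR_DEL15": [0.0, np.nan, 1.0],
})
n_missing = toy["ARR_DEL15"].isnull().sum()

# fillna returns a new DataFrame and only touches ARR_DEL15
filled = toy.fillna({"ARR_DEL15": 1})

# With no gaps left, the label can be stored as integer 0/1 values
filled["ARR_DEL15"] = filled["ARR_DEL15"].astype(int)
print(n_missing)                     # 1
print(filled["ARR_DEL15"].tolist())  # [0, 1, 1]
```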

```python
# Spot-check the rows at positions 177-184 after filling
df.iloc[177:185]

df.head()
```
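The `predict_delay` function in step 5 passes the departure *hour* (0 to 23) as `CRS_DEP_TIME`. In the BTS on-time data that column normally holds the scheduled local departure time as an HHMM number (for example 1905 for 19:05), and the steps shown here do not convert it. Assuming that encoding, one way to make training and prediction consistent is to bin the column to the hour before one-hot encoding, for example with `df['CRS_DEP_TIME'] = df['CRS_DEP_TIME'] // 100`. A toy illustration on hypothetical values:

```python
import pandas as pd

# Hypothetical scheduled departure times in HHMM form (1905 = 19:05)
toy = pd.DataFrame({"CRS_DEP_TIME": [5, 745, 1200, 1905, 2359]})

# Integer division by 100 keeps only the hour: 1905 -> 19, 745 -> 7, 5 -> 0
toy["CRS_DEP_TIME"] = toy["CRS_DEP_TIME"] // 100
print(toy["CRS_DEP_TIME"].tolist())  # [0, 7, 12, 19, 23]
```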

```python
# One-hot encode the airport codes: one indicator column per ORIGIN and DEST value
df = pd.get_dummies(df, columns=['ORIGIN', 'DEST'])

df.head()
```
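One-hot encoding replaces each categorical column with one indicator column per distinct value, named `<column>_<value>`. That is where names such as `ORIGIN_JFK` and `DEST_ATL` come from, and it is consistent with the 14 feature columns reported after the split: 4 numeric features plus 5 origin and 5 destination indicators for the five airports used in `predict_delay`. A toy illustration; `dtype=int` is passed only to get 0/1 integers, since recent pandas versions default to boolean indicator columns.

```python
import pandas as pd

toy = pd.DataFrame({
    "MONTH": [1, 1, 2],
    "ORIGIN": ["ATL", "JFK", "ATL"],
    "DEST": ["SEA", "ATL", "MSP"],
})
# Non-encoded columns come first, then the sorted dummies for each encoded column
encoded = pd.get_dummies(toy, columns=["ORIGIN", "DEST"], dtype=int)
print(list(encoded.columns))
# ['MONTH', 'ORIGIN_ATL', 'ORIGIN_JFK', 'DEST_ATL', 'DEST_MSP', 'DEST_SEA']
```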

4 Predict: Machine learning, which facilitates predictive analytics using large volumes of data by employing algorithms that iteratively learn from that data, is one of the fastest growing areas of data science.

One of the most popular tools for building machine-learning models is Scikit-learn, a free and open-source toolkit for Python programmers. It has built-in support for popular regression, classification, and clustering algorithms and works with other Python libraries such as NumPy and SciPy. With Scikit-learn, a single method call can replace hundreds of lines of hand-written code, which lets you focus on building, training, tuning, and testing machine-learning models without getting bogged down coding algorithms.

We will use Scikit-learn to build a machine-learning model from the on-time arrival data for a major U.S. airline. The goal is a model that could be useful in the real world for predicting whether a flight is likely to arrive on time, precisely the kind of problem machine learning is commonly used to solve.

```python
from sklearn.model_selection import train_test_split

# Hold out 20% of the rows for testing; ARR_DEL15 is the label to predict
train_x, test_x, train_y, test_y = train_test_split(
    df.drop('ARR_DEL15', axis=1), df['ARR_DEL15'], test_size=0.2, random_state=42)

train_x.shape  # (8984, 14)

test_x.shape   # (2247, 14)
```
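The shapes follow from `test_size=0.2`: scikit-learn rounds the test share up, so 11,231 rows become ceil(0.2 * 11,231) = 2,247 test rows and 8,984 training rows. A sketch that reproduces the arithmetic and shows the same behaviour on a small synthetic array:

```python
import math

import numpy as np
from sklearn.model_selection import train_test_split

n_rows = 11231                    # df.shape[0] reported above
n_test = math.ceil(0.2 * n_rows)  # the test share is rounded up
n_train = n_rows - n_test
print(n_train, n_test)            # 8984 2247

# The same split rule on 10 synthetic rows
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1] * 5)
tr_x, te_x, tr_y, te_y = train_test_split(X, y, test_size=0.2, random_state=42)
print(tr_x.shape, te_x.shape)     # (8, 2) (2, 2)
```

If late flights are a minority, passing `stratify=df['ARR_DEL15']` to `train_test_split` is an option that keeps the share of late flights roughly equal in both splits.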

```python
from sklearn.ensemble import RandomForestClassifier

model = RandomForestClassifier(random_state=13)
model.fit(train_x, train_y)

predicted = model.predict(test_x)

model.score(test_x, test_y)  # mean accuracy on the test set
```
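For classifiers, `model.score` returns mean accuracy: the fraction of test flights whose label is predicted correctly. If most flights arrive on time, a model can reach high accuracy largely by predicting "on time", so comparing against a majority-class baseline is a useful sanity check. A sketch on hypothetical labels using scikit-learn's `DummyClassifier`:

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score

# Hypothetical labels: 8 on-time (0) and 2 late (1) flights
y_true = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])
X = np.zeros((len(y_true), 1))  # this baseline ignores the features

baseline = DummyClassifier(strategy="most_frequent").fit(X, y_true)
print(baseline.score(X, y_true))  # 0.8: accuracy of always predicting 0

# score() gives the same number as accuracy_score on the predictions
print(accuracy_score(y_true, baseline.predict(X)))  # 0.8
```

On the real data the comparable baseline would be `DummyClassifier(strategy='most_frequent').fit(train_x, train_y).score(test_x, test_y)`.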

```python
from sklearn.metrics import roc_auc_score

probabilities = model.predict_proba(test_x)

# Column 1 holds the predicted probability of ARR_DEL15 = 1 (a late arrival)
roc_auc_score(test_y, probabilities[:, 1])
```
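ROC AUC measures how well the predicted probabilities rank late flights above on-time ones. It equals the probability that a randomly chosen positive (late) example scores higher than a randomly chosen negative one, with ties counting half. A small sketch comparing that pairwise definition with `roc_auc_score` on toy values; `pairwise_auc` is an illustrative helper, not a library function.

```python
from itertools import product

from sklearn.metrics import roc_auc_score


def pairwise_auc(y_true, scores):
    """Share of (positive, negative) pairs where the positive scores higher; ties count half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]  # e.g. predicted probability of being late
print(pairwise_auc(y_true, scores))   # 0.75
print(roc_auc_score(y_true, scores))  # 0.75
```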

```python
from sklearn.metrics import confusion_matrix

confusion_matrix(test_y, predicted)
```
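In scikit-learn's `confusion_matrix`, rows are the true labels and columns the predicted labels, both ordered by label value. With labels 0 (on time) and 1 (late), and late treated as the positive class, the matrix reads `[[TN, FP], [FN, TP]]`. A toy check:

```python
from sklearn.metrics import confusion_matrix

y_true = [0, 0, 0, 1, 1, 0]
y_pred = [0, 1, 0, 1, 0, 0]
cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[3 1]
#  [1 1]]

# Flatten row by row into the four counts
tn, fp, fn, tp = cm.ravel()
```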

```python
from sklearn.metrics import precision_score, recall_score

# Note: both scores below are computed on the training data
train_predictions = model.predict(train_x)

precision_score(train_y, train_predictions)

recall_score(train_y, train_predictions)
```
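Both scores above are computed on `train_x`, the data the forest was fitted on, so they mainly show how well the model fits its own training data; random forests often score close to perfect there. Computing the same metrics on the held-out split, with `precision_score(test_y, predicted)` and `recall_score(test_y, predicted)`, is likely to give a more realistic estimate. With late (1) as the positive class, precision = TP / (TP + FP) and recall = TP / (TP + FN). A toy check against scikit-learn:

```python
from sklearn.metrics import confusion_matrix, precision_score, recall_score

y_true = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 1, 0, 1, 0]

tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
precision = tp / (tp + fp)  # of flights predicted late, the share that really were late
recall = tp / (tp + fn)     # of flights that were late, the share the model caught
print(precision, recall)    # 0.6 0.75
print(precision_score(y_true, y_pred), recall_score(y_true, y_pred))  # 0.6 0.75
```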

5 Visualize: Now that you have trained a machine-learning model to perform predictive analytics, it's time to put it to work. In this final step, you will write a function that uses the model built in step 4 to predict whether a flight will arrive on time or late, and you will use Matplotlib, the popular plotting and charting library for Python (with Seaborn for styling), to visualize the results.

```python
%matplotlib inline
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.metrics import roc_curve

sns.set()  # apply Seaborn's default plot styling

# ROC curve for the test set; the dashed diagonal marks a random classifier
fpr, tpr, _ = roc_curve(test_y, probabilities[:, 1])
plt.plot(fpr, tpr)
plt.plot([0, 1], [0, 1], color='grey', lw=1, linestyle='--')
plt.xlabel('False Positive Rate')
plt.ylabel('True Positive Rate')
```
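`roc_curve` returns false-positive rates, true-positive rates and the score thresholds that produce them, and the plot draws TPR against FPR. A toy sketch that prints those points and shows that their trapezoidal area, computed with `sklearn.metrics.auc`, matches `roc_auc_score`:

```python
from sklearn.metrics import auc, roc_auc_score, roc_curve

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

fpr, tpr, thresholds = roc_curve(y_true, scores)
# Each (fpr[i], tpr[i]) point comes from predicting "late" whenever score >= thresholds[i]
for f, t, th in zip(fpr, tpr, thresholds):
    print(f"threshold={th:.2f}  FPR={f:.2f}  TPR={t:.2f}")

# Area under the points (trapezoidal rule) equals the ROC AUC
print(auc(fpr, tpr), roc_auc_score(y_true, scores))  # 0.75 0.75
```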

```python
from datetime import datetime

import pandas as pd


def predict_delay(departure_date_time, origin, destination):
    """Return the model's probability that the flight arrives on time.

    departure_date_time is a 'dd/mm/yyyy hh:mm:ss' string; origin and
    destination are airport codes (ATL, DTW, JFK, MSP or SEA).
    """
    try:
        departure_date_time_parsed = datetime.strptime(departure_date_time, '%d/%m/%Y %H:%M:%S')
    except ValueError as e:
        return 'Error parsing date/time - {}'.format(e)

    month = departure_date_time_parsed.month
    day = departure_date_time_parsed.day
    day_of_week = departure_date_time_parsed.isoweekday()  # Monday = 1 ... Sunday = 7
    hour = departure_date_time_parsed.hour

    origin = origin.upper()
    destination = destination.upper()

    # Keys must match the training columns (train_x.columns), in the same order
    input_row = [{'MONTH': month,
                  'DAY_OF_MONTH': day,
                  'DAY_OF_WEEK': day_of_week,
                  'CRS_DEP_TIME': hour,
                  'ORIGIN_ATL': 1 if origin == 'ATL' else 0,
                  'ORIGIN_DTW': 1 if origin == 'DTW' else 0,
                  'ORIGIN_JFK': 1 if origin == 'JFK' else 0,
                  'ORIGIN_MSP': 1 if origin == 'MSP' else 0,
                  'ORIGIN_SEA': 1 if origin == 'SEA' else 0,
                  'DEST_ATL': 1 if destination == 'ATL' else 0,
                  'DEST_DTW': 1 if destination == 'DTW' else 0,
                  'DEST_JFK': 1 if destination == 'JFK' else 0,
                  'DEST_MSP': 1 if destination == 'MSP' else 0,
                  'DEST_SEA': 1 if destination == 'SEA' else 0}]

    # Column 0 of predict_proba is class 0 (ARR_DEL15 = 0, i.e. on time)
    return model.predict_proba(pd.DataFrame(input_row))[0][0]
```
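Hard-coding the 14 keys works for the five airports in this dataset, but the list has to be edited by hand if the data ever contains other airports. A hypothetical helper, `make_feature_row` (not part of the original), could instead derive the row from the training columns. It assumes the `ORIGIN_`/`DEST_` prefixes produced by `pd.get_dummies` and the four numeric feature names used above.

```python
import pandas as pd


def make_feature_row(columns, month, day, day_of_week, hour, origin, destination):
    """Build a one-row DataFrame with exactly the given training columns.

    All indicator columns start at 0 and only the matching origin and
    destination flags are set to 1; an airport code with no matching
    column raises ValueError.
    """
    row = pd.DataFrame(0, index=[0], columns=list(columns))
    row.loc[0, ['MONTH', 'DAY_OF_MONTH', 'DAY_OF_WEEK', 'CRS_DEP_TIME']] = [month, day, day_of_week, hour]
    for col in ('ORIGIN_' + origin.upper(), 'DEST_' + destination.upper()):
        if col not in row.columns:
            raise ValueError('Unknown airport column: ' + col)
        row.loc[0, col] = 1
    return row
```

With the trained model, usage would look like `model.predict_proba(make_feature_row(train_x.columns, 10, 1, 1, 21, 'JFK', 'ATL'))[0][0]`.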

```python
predict_delay('1/10/2018 21:45:00', 'JFK', 'ATL')

predict_delay('2/10/2018 21:45:00', 'JFK', 'ATL')

predict_delay('2/10/2018 10:00:00', 'ATL', 'SEA')
```
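The date strings are parsed with the format `'%d/%m/%Y %H:%M:%S'`, so they are day first: `'1/10/2018 21:45:00'` is 1 October 2018, not 10 January. A quick standard-library check of the values the function derives from that string:

```python
from datetime import datetime

parsed = datetime.strptime('1/10/2018 21:45:00', '%d/%m/%Y %H:%M:%S')
print(parsed.month, parsed.day)  # 10 1
print(parsed.isoweekday())       # 1 (1 October 2018 was a Monday)
print(parsed.hour)               # 21, the value passed as CRS_DEP_TIME
```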

```python
import numpy as np

labels = ('Oct 1', 'Oct 2', 'Oct 3', 'Oct 4', 'Oct 5', 'Oct 6', 'Oct 7')
values = (predict_delay('1/10/2018 21:45:00', 'JFK', 'ATL'),
          predict_delay('2/10/2018 21:45:00', 'JFK', 'ATL'),
          predict_delay('3/10/2018 21:45:00', 'JFK', 'ATL'),
          predict_delay('4/10/2018 21:45:00', 'JFK', 'ATL'),
          predict_delay('5/10/2018 21:45:00', 'JFK', 'ATL'),
          predict_delay('6/10/2018 21:45:00', 'JFK', 'ATL'),
          predict_delay('7/10/2018 21:45:00', 'JFK', 'ATL'))
alabels = np.arange(len(labels))

plt.bar(alabels, values, align='center', alpha=0.5)
plt.xticks(alabels, labels)
plt.ylabel('Probability of On-Time Arrival')
plt.ylim((0.0, 1.0))
```
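The seven `predict_delay` calls differ only in the date, so the labels and inputs can also be generated in a loop. A sketch in which `week_inputs` is a hypothetical helper that returns the bar labels and the `dd/mm/yyyy hh:mm:ss` strings the function expects:

```python
from datetime import datetime, timedelta


def week_inputs(start, days=7):
    """Return (labels, date strings) for `days` consecutive days starting at `start`."""
    dates = [start + timedelta(days=i) for i in range(days)]
    labels = [f"{d:%b} {d.day}" for d in dates]  # e.g. 'Oct 1'
    stamps = [d.strftime('%d/%m/%Y %H:%M:%S') for d in dates]
    return labels, stamps


labels, stamps = week_inputs(datetime(2018, 10, 1, 21, 45))
print(labels[0], stamps[0])  # Oct 1 01/10/2018 21:45:00
# values = [predict_delay(s, 'JFK', 'ATL') for s in stamps]
```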

![image 36](https://ididtryit.files.wordpress.com/2020/06/image36_thumb.png?w=669&h=280)

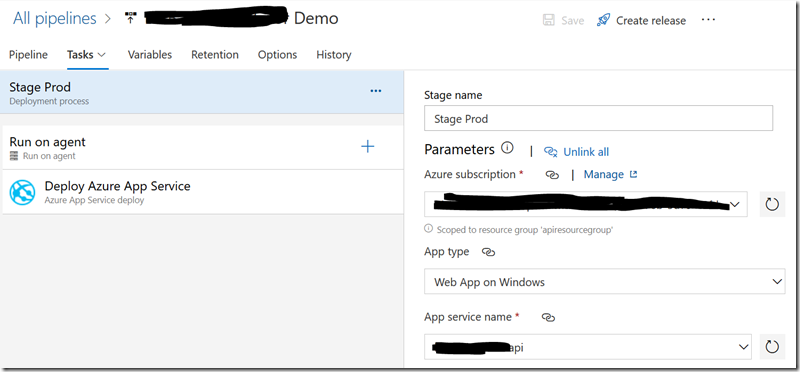

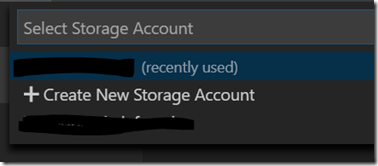

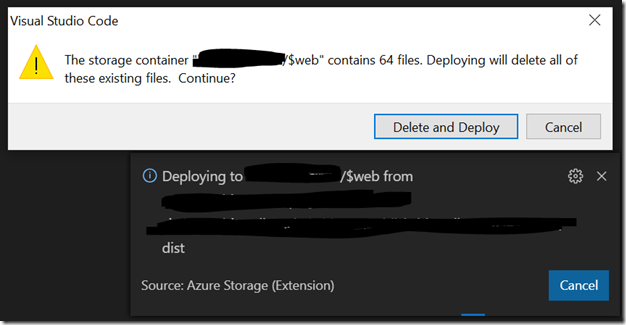

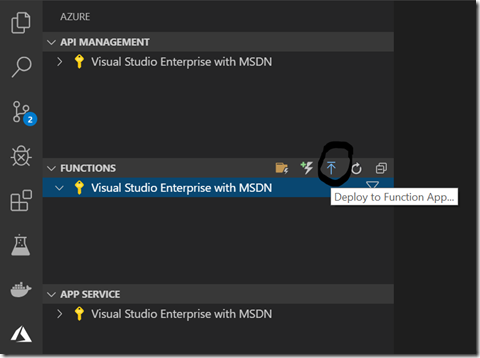

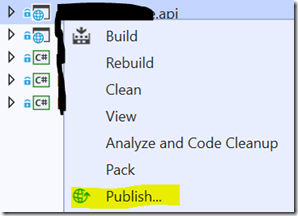

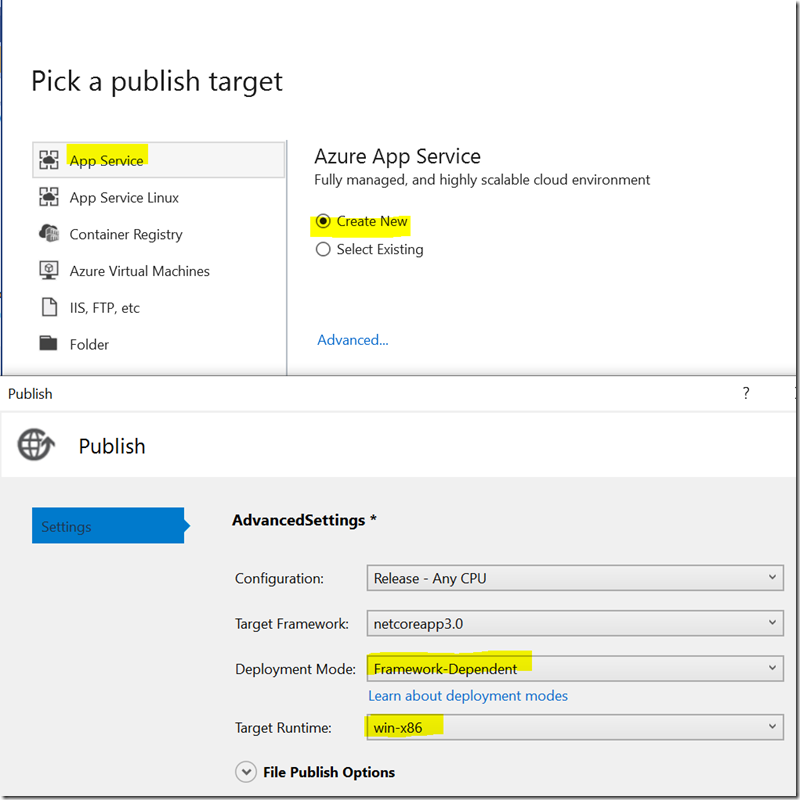

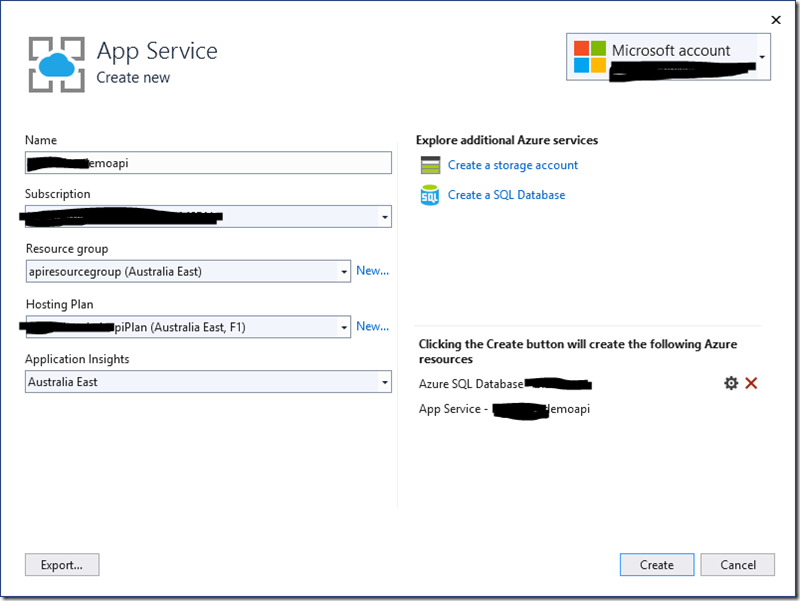

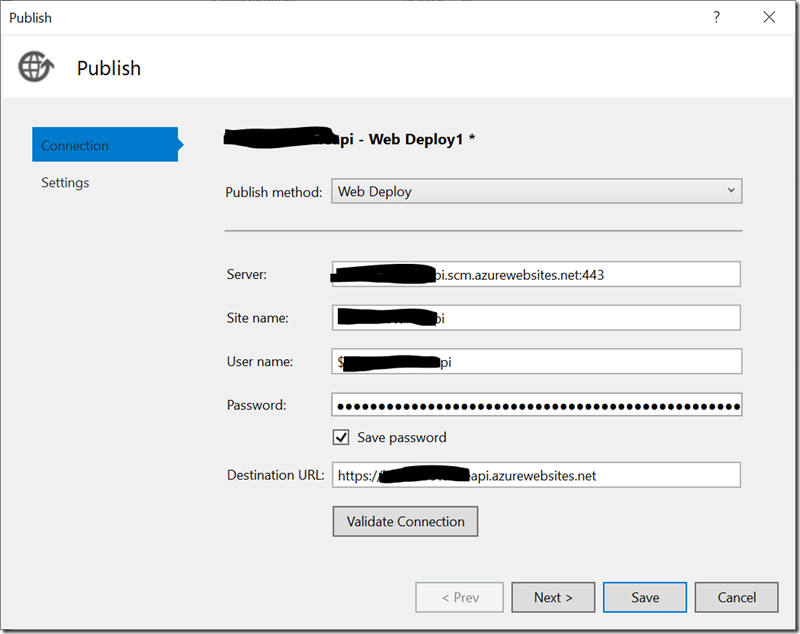

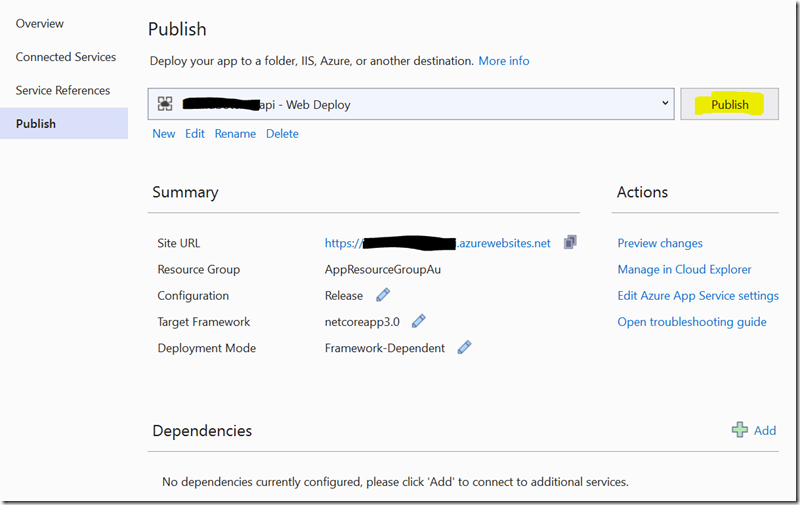

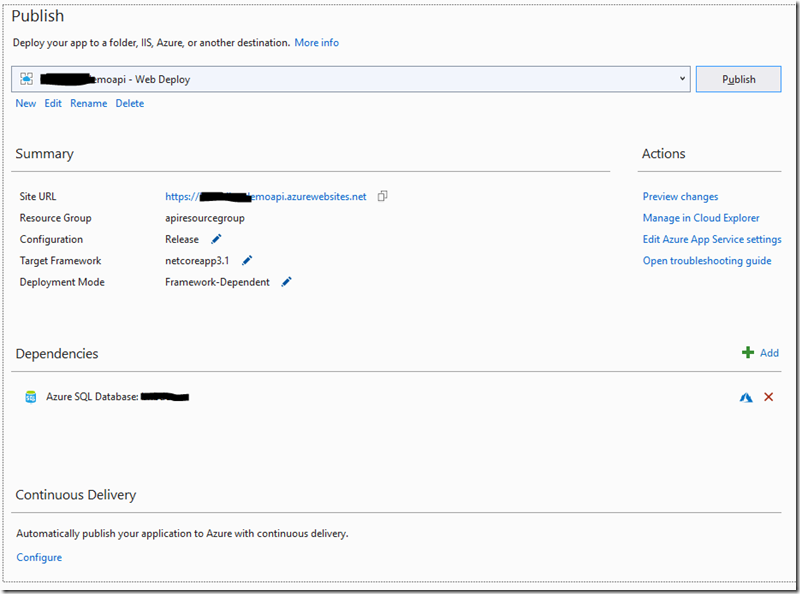

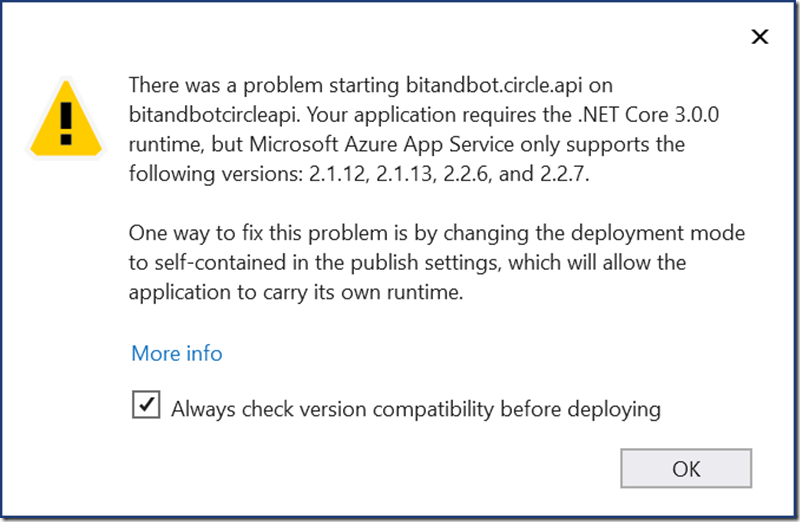

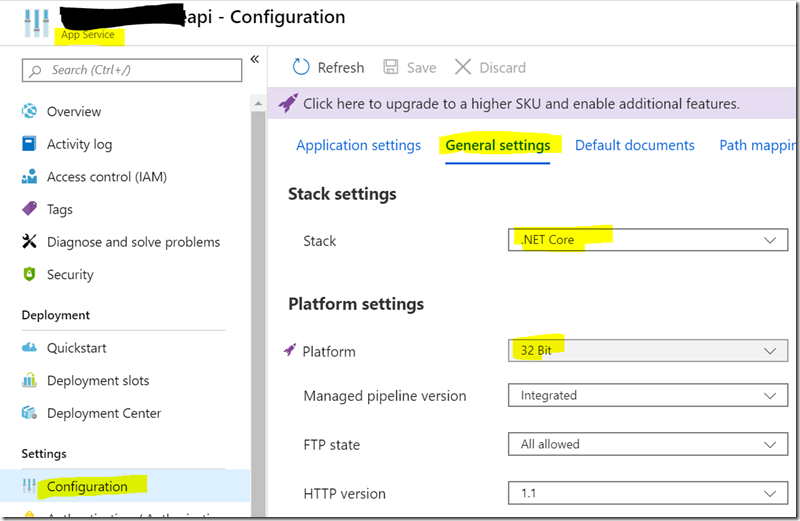

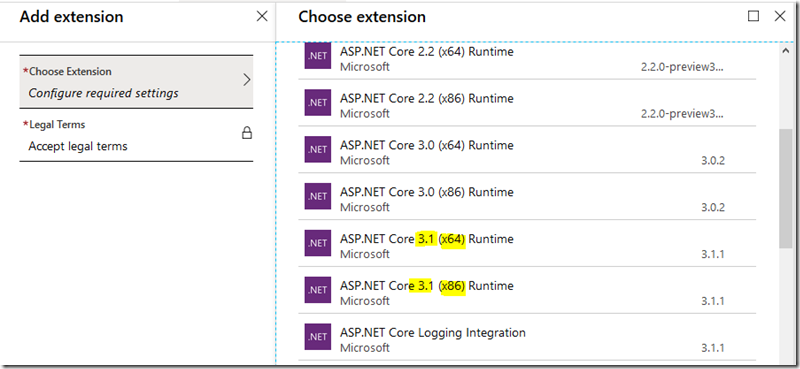

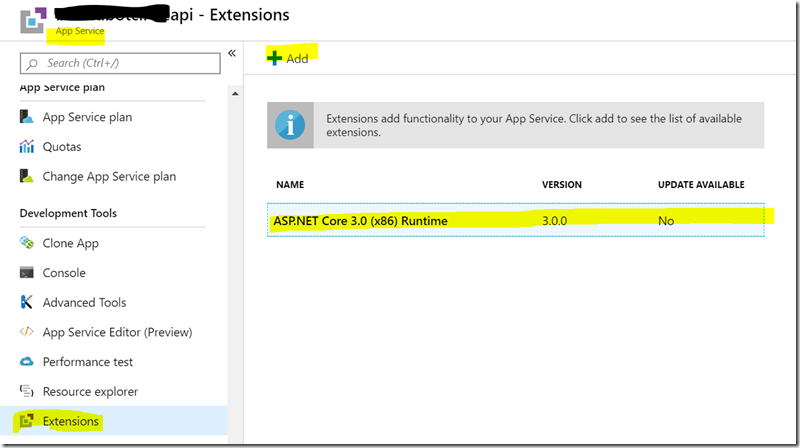

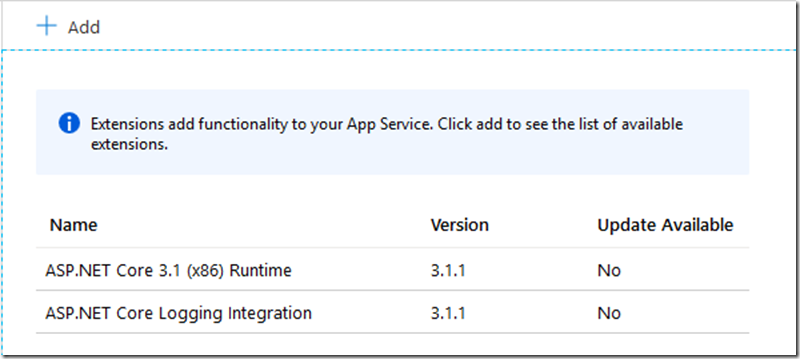

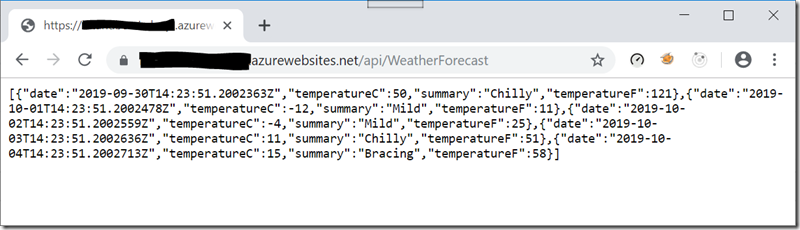

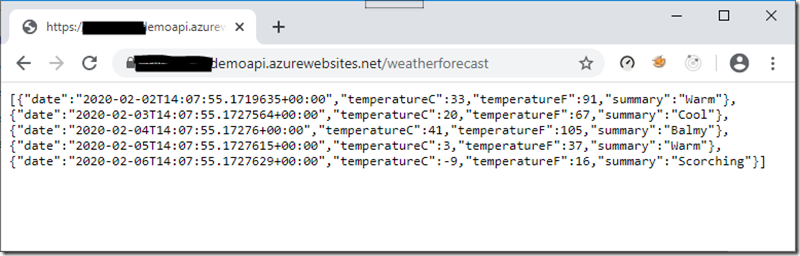

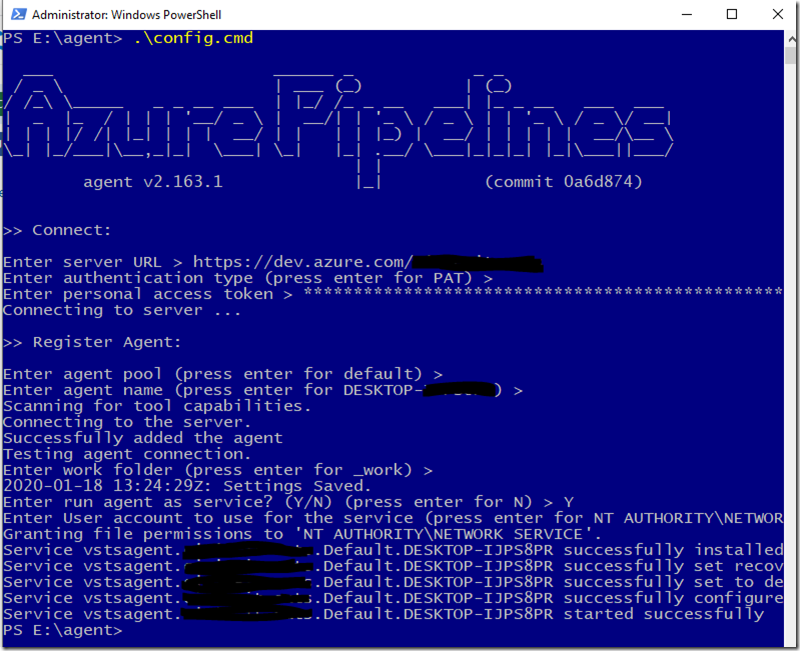

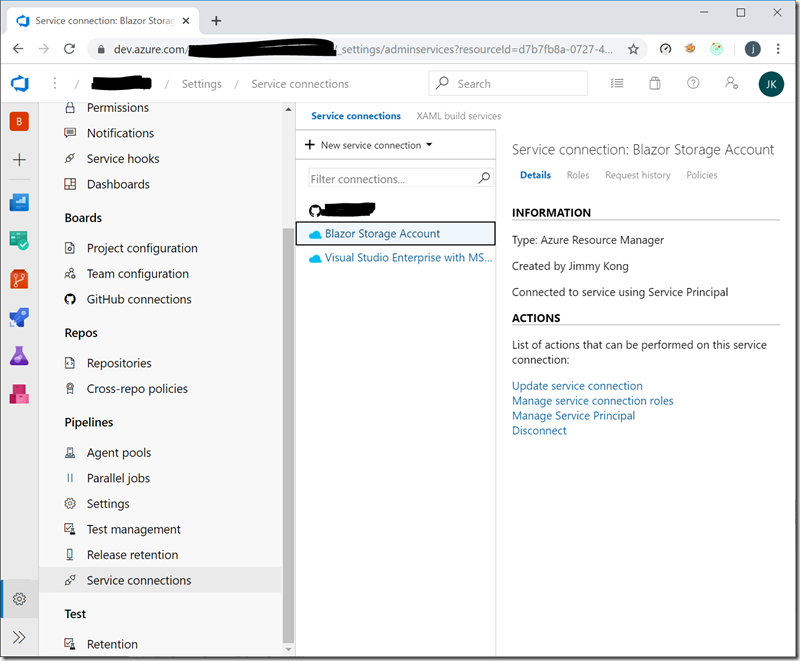

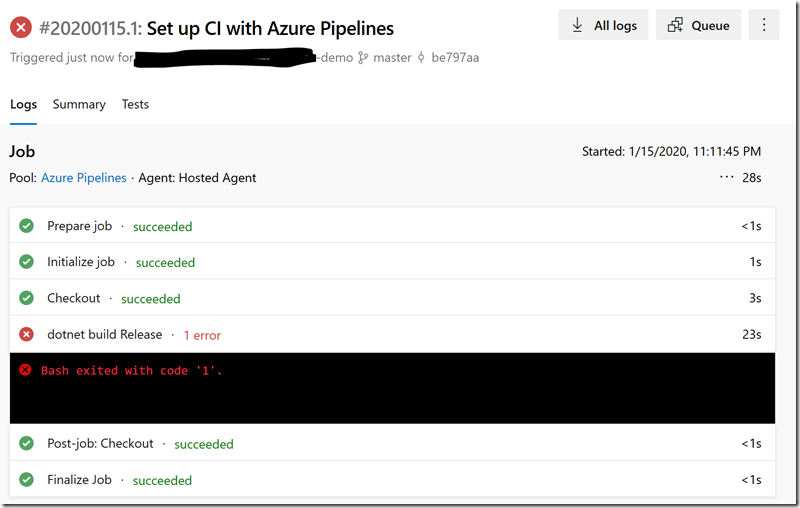

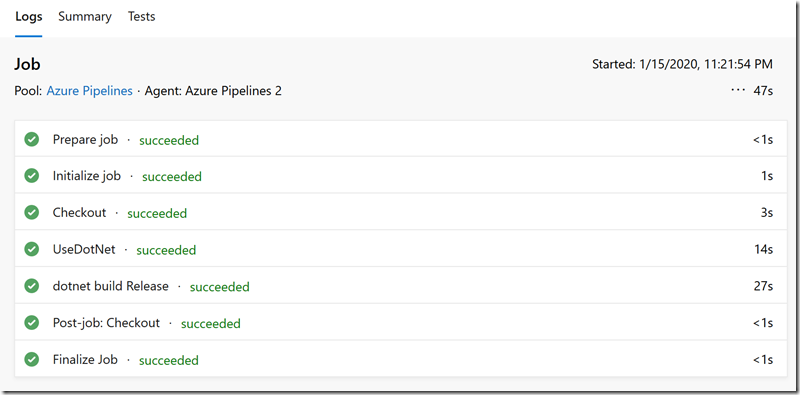

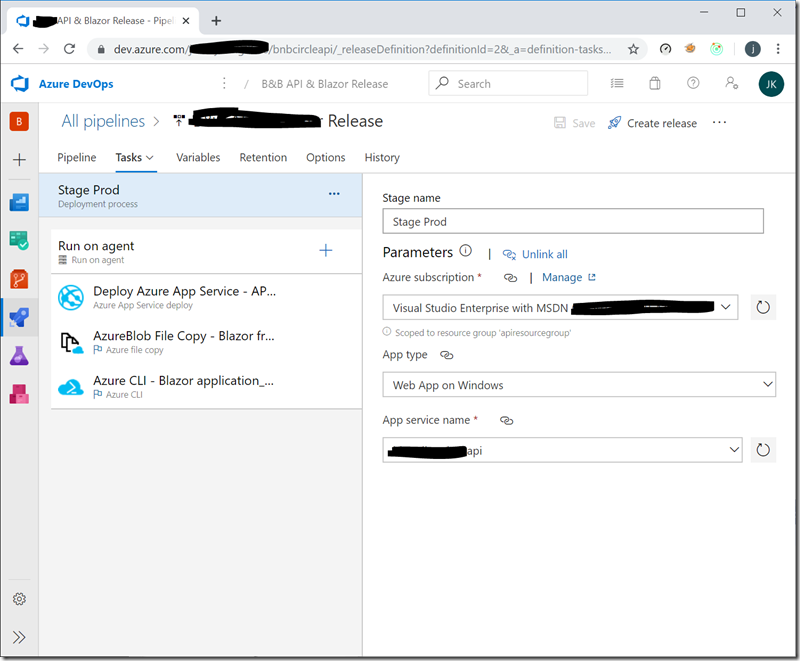

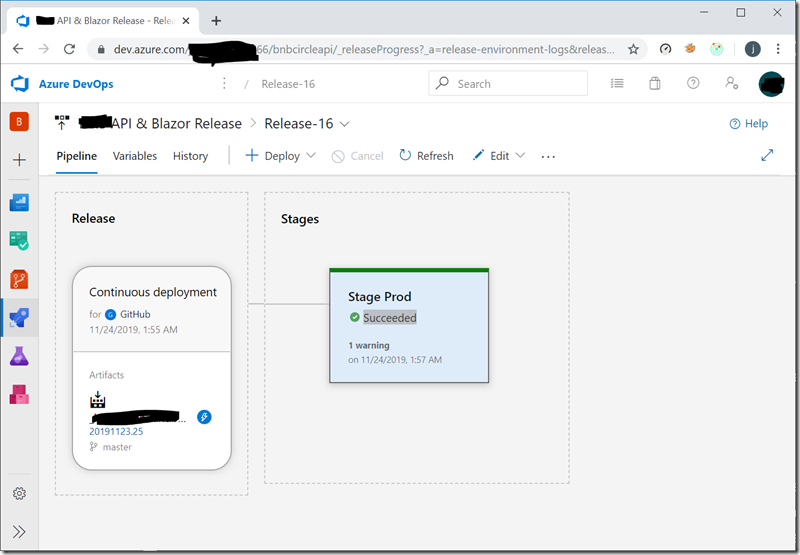

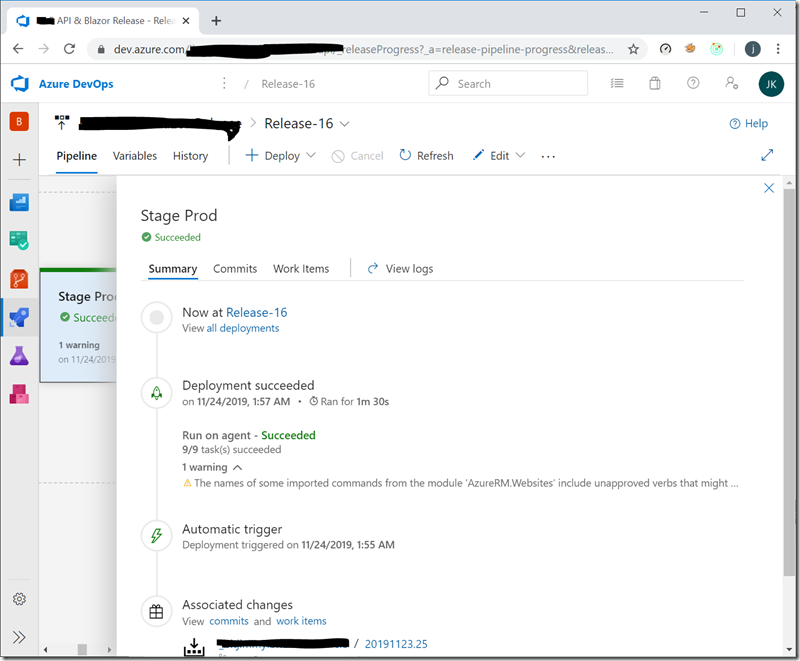

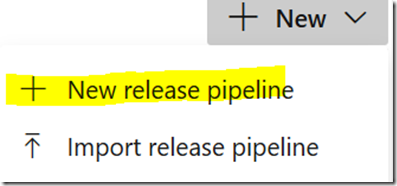

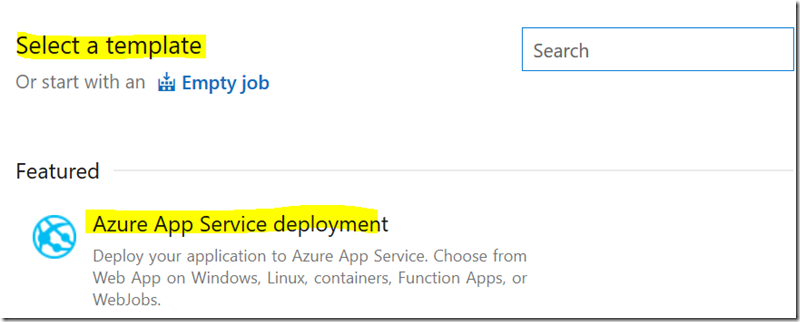

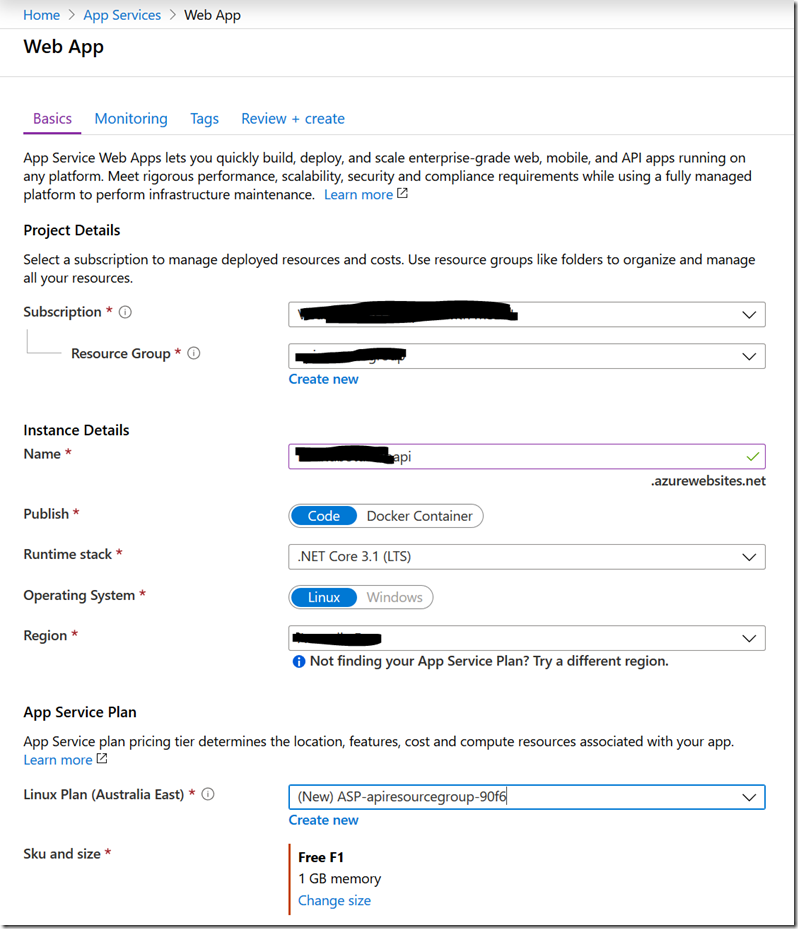

3.5 Create a new App Service via Visual Studio 2019, whose Publish option offers a wizard that creates a new App Service on the Windows OS platform seamlessly. This made it hassle-free to set up 'Deploy Azure App Service' in CD.
